StationBench

A Python library for benchmarking weather forecasts against weather station data. It provides tools to calculate metrics, visualize results, and compare different forecast models.

https://github.com/juaai/stationbench

Category: Atmosphere

Sub Category: Meteorological Observation and Forecast

Keywords

benchmarking, forecasting, weather

Last synced: about 4 hours ago

Repository metadata

Benchmarking of weather forecasts based on station observations

- Host: GitHub

- URL: https://github.com/juaai/stationbench

- Owner: juaAI

- License: mit

- Created: 2025-01-09T15:00:48.000Z (about 1 year ago)

- Default Branch: main

- Last Pushed: 2025-07-01T11:06:23.000Z (8 months ago)

- Last Synced: 2026-01-27T03:54:29.079Z (27 days ago)

- Topics: benchmarking, forecasting, weather

- Language: Python

- Homepage: https://pypi.org/project/stationbench/

- Size: 660 MB

- Stars: 85

- Watchers: 3

- Forks: 3

- Open Issues: 3

- Releases: 2

Metadata Files:

- Readme: README.md

- Contributing: CONTRIBUTING.md

- License: LICENSE

- Codeowners: .github/CODEOWNERS

README.md

StationBench

StationBench is a Python library for benchmarking weather forecasts against weather station data. It provides tools to calculate metrics, visualize results, and compare different forecast models.

Features

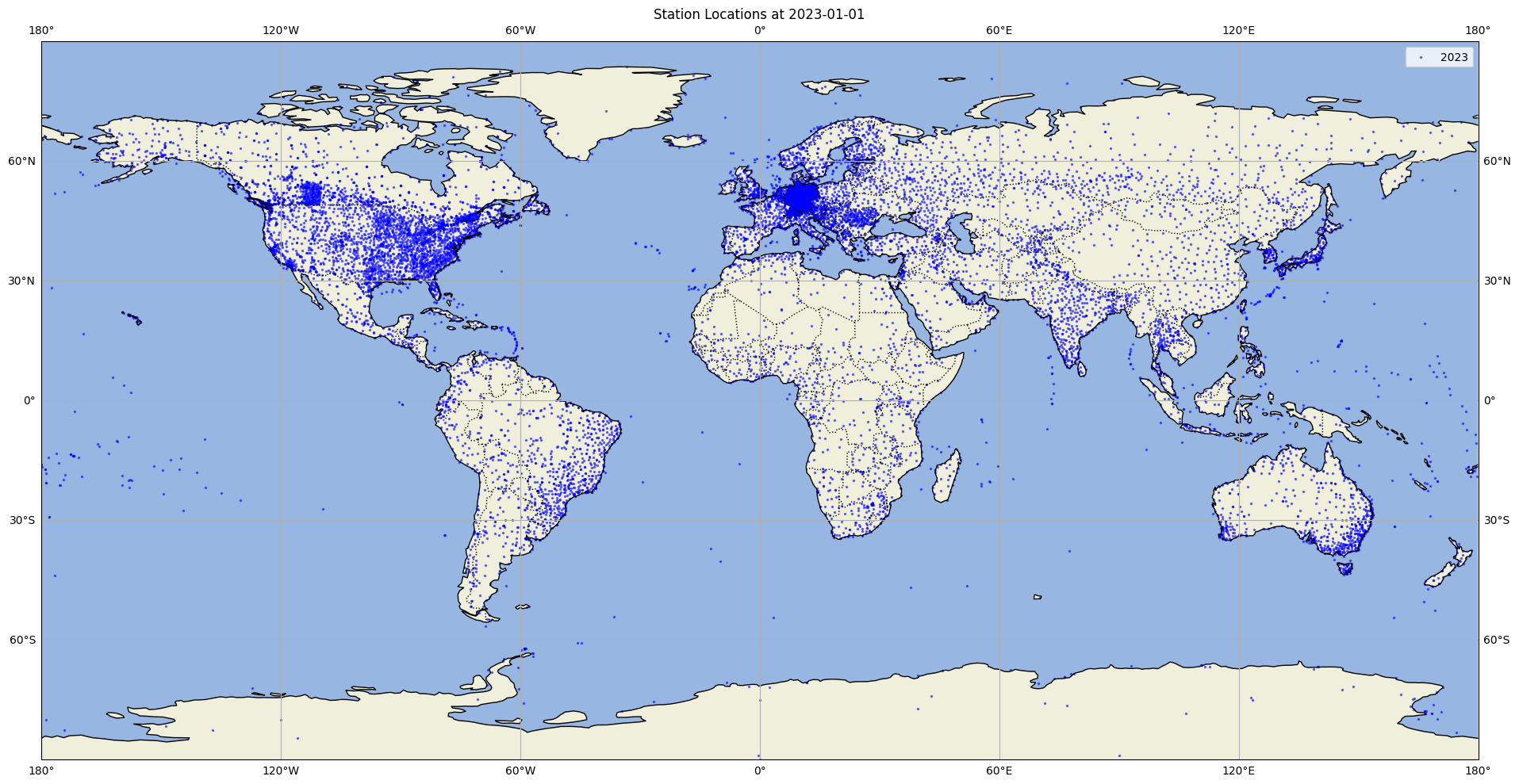

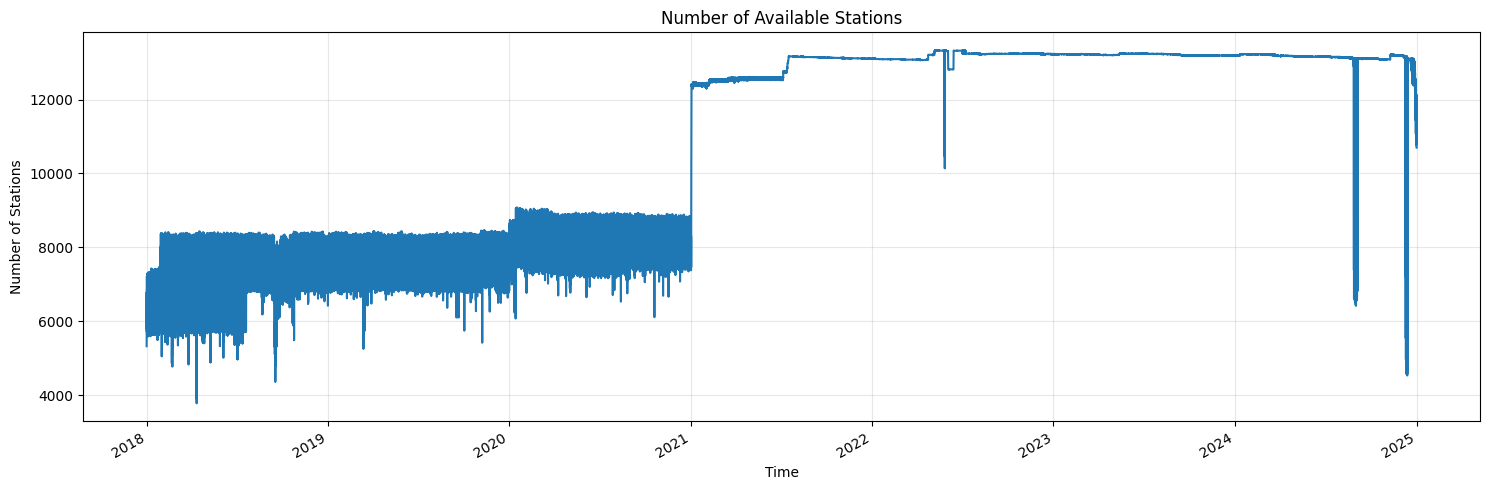

- Pre-processed ground truth data from 10,000+ weather stations around the world included in the package

- Calculate RMSE and other metrics between forecasts and ground truth data

- Support for multiple weather variables (temperature, wind speed, solar radiation)

- Regional analysis capabilities (Europe, North America, Global, etc.)

- Integration with Weights & Biases for experiment tracking

Installation

pip install stationbench

Documentation

Full documentation is available in the docs/ directory.

Quick Start

Data Format Requirements

Forecast Data

- Must include dimensions: latitude, longitude, time

- Variables should include:

  - 10m_wind_speed (or custom name)

  - 2m_temperature (or custom name)

Ground Truth Data

StationBench ships with ready-to-use observations from weather stations around the world. The benchmarking data is a subset of the Meteostat dataset and covers 10m wind speed and 2m temperature for 2018-2024. The data is provided by the following organizations:

- Deutscher Wetterdienst

- NOAA

- Government of Canada

- MET Norway

- European Data Portal

- Offene Daten Österreich

Source: Meteostat (CC BY-NC 4.0)

The benchmarking data can be accessed from https://opendata.jua.ai/stationbench/meteostat_benchmark.zarr.

Besides the provided benchmarking data, you can also use your own ground truth data. It must be in zarr format and meet the following requirements:

- Must include dimensions: station_id, time

- Must include coordinates: latitude, longitude
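Before pointing StationBench at custom data, it can help to check the layout up front. The sketch below is not part of the library: `missing_names` and `check_ground_truth` are hypothetical helpers, and the only facts taken from this README are the required dimension and coordinate names.

```python
from typing import Iterable

# Required names as listed in this README; the helpers below are hypothetical.
FORECAST_DIMS = {"latitude", "longitude", "time"}
GROUND_TRUTH_DIMS = {"station_id", "time"}
GROUND_TRUTH_COORDS = {"latitude", "longitude"}


def missing_names(required: set, present: Iterable[str]) -> list:
    """Return the required names absent from `present`, sorted for stable output."""
    return sorted(set(required) - set(present))


def check_ground_truth(dims: Iterable[str], coords: Iterable[str]) -> list:
    """Collect readable problems with a ground truth layout (empty list if none)."""
    problems = []
    for name in missing_names(GROUND_TRUTH_DIMS, dims):
        problems.append(f"missing dimension: {name}")
    for name in missing_names(GROUND_TRUTH_COORDS, coords):
        problems.append(f"missing coordinate: {name}")
    return problems


# A layout that satisfies the requirements, and one that does not.
print(check_ground_truth(["station_id", "time"], ["latitude", "longitude"]))
print(check_ground_truth(["time"], ["latitude"]))
# The same helper covers the forecast dimensions listed earlier.
print(missing_names(FORECAST_DIMS, ["time", "latitude"]))
```

With xarray, passing `ds.dims` and `ds.coords` of an opened dataset should work, since iterating over either yields names.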

Calculate Metrics

This script computes metrics by comparing forecast data against ground truth data for specified time periods and regions. The outputs are RMSE, MBE, and skill scores for each variable and lead time, stored in the same format as the ground truth data.

Options

- --forecast: Location of the forecast data (required)

- --stations: Location of the ground truth data (defaults to https://opendata.jua.ai/stationbench/meteostat_benchmark.zarr)

- --start_date: Start date for benchmarking (required)

- --end_date: End date for benchmarking (required)

- --output: Output path for benchmarks (required)

- --region: Region to benchmark (see regions.py for available regions)

- --name_10m_wind_speed: Name of 10m wind speed variable (optional)

- --name_2m_temperature: Name of 2m temperature variable (optional)

- --use_dask: Enable parallel computation with Dask (recommended for datasets >10GB)

- --n_workers: Number of Dask workers to use (default: 4, only used if --use_dask is set and no client exists)

If the name of a variable is not provided, no metrics are computed for that variable.

Compare forecasts

After generating the metrics, you can use the compare_forecasts.py script to compute metrics, create visualizations, and log the results to Weights & Biases (W&B).

What it does

The compare_forecasts.py script:

- Computes RMSE (Root Mean Square Error) and skill scores for different variables and lead time ranges.

- Generates geographical scatter plots showing the spatial distribution of errors.

- Creates line plots showing the temporal evolution of errors.

- Saves all visualizations and metrics to a directory, optionally logs to Weights & Biases.

Options

- --benchmark_datasets_locs: Dictionary of reference benchmark locations; the skill score is computed between the first and the second dataset (required)

- --regions: Comma-separated list of regions, see regions.py for available regions (required)

- --wandb_run_name: Weights & Biases run name (optional); if not provided, Weights & Biases will not be used

- --output_dir: Output directory for results (optional, defaults to stationbench-results)
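This README does not spell out the skill score formula. A common RMSE-based definition is 1 - RMSE_forecast / RMSE_reference, and the sketch below assumes that form. Which of the two datasets acts as the reference is also an assumption here, so treat this as an illustration of the idea rather than the library's exact computation.

```python
def skill_score(rmse_forecast: float, rmse_reference: float) -> float:
    """RMSE skill score under the assumed definition 1 - forecast / reference.

    Positive values mean the forecast has a lower RMSE than the reference,
    zero means parity, and negative values mean it is worse.
    """
    if rmse_reference == 0:
        raise ValueError("reference RMSE is zero; skill score is undefined")
    return 1.0 - rmse_forecast / rmse_reference


# Illustrative RMSE values per lead time in hours (made-up numbers).
rmse_a = {24: 1.5, 48: 2.0}
rmse_b = {24: 2.0, 48: 2.0}

skill_by_lead_time = {
    lead: skill_score(rmse_a[lead], rmse_b[lead]) for lead in rmse_a
}
print(skill_by_lead_time)
```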

Usage

StationBench can be used either as a Python package or through command-line interfaces.

Python Package Usage

    import stationbench

    # Calculate metrics
    stationbench.calculate_metrics(
        forecast="path/to/forecast.zarr",
        start_date="2023-01-01",
        end_date="2023-12-31",
        output="path/to/forecast_metrics.zarr",
        region="europe",
        name_10m_wind_speed="10si",
        name_2m_temperature="2t",
    )

    # Compare forecasts
    stationbench.compare_forecasts(
        benchmark_datasets_locs={"HRES": "path/to/hres_metrics.zarr", "ENS": "path/to/ens_metrics.zarr"},
        regions=["europe"],
    )

Command-Line Usage

Calculate metrics for a forecast dataset:

    # Flags in [brackets] are optional; remove the brackets when using them.
    # --use_dask enables parallel computation with Dask.
    # --n_workers sets the number of Dask workers to use.
    stationbench-calculate \
        --forecast path/to/forecast.zarr \
        --start_date 2023-01-01 \
        --end_date 2023-12-31 \
        --output path/to/forecast_metrics.zarr \
        --region europe \
        --name_10m_wind_speed "10si" \
        --name_2m_temperature "2t" \
        [--use_dask] \
        [--n_workers 4]

For small datasets, it's recommended to run without Dask. For large datasets (>10GB), enabling Dask with --use_dask can improve performance.
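When the command is assembled from a script, the optional flags can be added conditionally. The sketch below uses only the flags documented above. The helper `build_calculate_command`, the `forecast_size_bytes` parameter, and reading ">10GB" as 10 GiB are assumptions made for illustration.

```python
import shlex
from typing import List, Optional

# ">10GB" from the README, interpreted here as 10 GiB (assumption).
DASK_THRESHOLD_BYTES = 10 * 1024**3


def build_calculate_command(
    forecast: str,
    start_date: str,
    end_date: str,
    output: str,
    region: str,
    name_10m_wind_speed: Optional[str] = None,
    name_2m_temperature: Optional[str] = None,
    forecast_size_bytes: int = 0,
    n_workers: int = 4,
) -> List[str]:
    """Build the stationbench-calculate argument list (hypothetical helper)."""
    cmd = [
        "stationbench-calculate",
        "--forecast", forecast,
        "--start_date", start_date,
        "--end_date", end_date,
        "--output", output,
        "--region", region,
    ]
    # A variable without a name is skipped, so no metrics are computed for it.
    if name_10m_wind_speed is not None:
        cmd += ["--name_10m_wind_speed", name_10m_wind_speed]
    if name_2m_temperature is not None:
        cmd += ["--name_2m_temperature", name_2m_temperature]
    # Only enable Dask for datasets above the size threshold.
    if forecast_size_bytes > DASK_THRESHOLD_BYTES:
        cmd += ["--use_dask", "--n_workers", str(n_workers)]
    return cmd


small = build_calculate_command(
    "path/to/forecast.zarr", "2023-01-01", "2023-12-31",
    "path/to/forecast_metrics.zarr", "europe",
    name_2m_temperature="2t",
)
large = build_calculate_command(
    "path/to/forecast.zarr", "2023-01-01", "2023-12-31",
    "path/to/forecast_metrics.zarr", "europe",
    name_10m_wind_speed="10si", name_2m_temperature="2t",
    forecast_size_bytes=50 * 1024**3,
)
print(shlex.join(small))
print(shlex.join(large))
```

The resulting list can be passed directly to `subprocess.run`, which avoids shell quoting issues.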

Compare forecasts:

    stationbench-compare \
        --benchmark_datasets_locs '{"HRES": "path/to/hres_metrics.zarr", "ENS": "path/to/ens_metrics.zarr"}' \
        --regions europe \
        [--wandb_run_name "run_name"] \
        [--output_dir "path/to/output_dir"]
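Because --benchmark_datasets_locs takes a dictionary on the command line, hand-written quoting is easy to get wrong. One way to sidestep that is to serialize the dictionary with `json.dumps`, as sketched below. The helper name `build_compare_command` is hypothetical, and the sketch assumes the CLI accepts the JSON form shown in the example above.

```python
import json
import shlex
from typing import Dict, List, Optional


def build_compare_command(
    benchmark_datasets_locs: Dict[str, str],
    regions: List[str],
    wandb_run_name: Optional[str] = None,
    output_dir: Optional[str] = None,
) -> List[str]:
    """Build the stationbench-compare argument list (hypothetical helper)."""
    cmd = [
        "stationbench-compare",
        # Python dicts keep insertion order, and so does json.dumps, which
        # matters because the skill score uses the first and second dataset.
        "--benchmark_datasets_locs", json.dumps(benchmark_datasets_locs),
        # Regions are passed as one comma-separated value.
        "--regions", ",".join(regions),
    ]
    if wandb_run_name is not None:
        cmd += ["--wandb_run_name", wandb_run_name]
    if output_dir is not None:
        cmd += ["--output_dir", output_dir]
    return cmd


locs = {"HRES": "path/to/hres_metrics.zarr", "ENS": "path/to/ens_metrics.zarr"}
compare_cmd = build_compare_command(locs, ["europe"])
# shlex.join adds the shell quoting needed around the JSON argument.
print(shlex.join(compare_cmd))
```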

Contributing

We welcome contributions! Please see our CONTRIBUTING.md for details.

License

This project is licensed under the MIT License - see the LICENSE file for details.

Metrics

StationBench calculates the following verification metrics:

- RMSE (Root Mean Square Error): Measures the average magnitude of forecast errors, giving greater weight to larger errors

- MBE (Mean Bias Error): Measures the average direction and magnitude of forecast bias. Positive values indicate the forecast tends to overpredict, while negative values indicate underprediction.
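Both metrics can be written in a few lines. The sketch below is a plain-Python illustration of the standard formulas, with the error taken as forecast minus observation so that positive MBE means overprediction, as described above. It is not the library's implementation, which may operate on whole arrays, and the sample values are made up.

```python
import math
from typing import List, Sequence


def _errors(forecast: Sequence[float], observed: Sequence[float]) -> List[float]:
    """Per-sample errors, defined as forecast minus observation."""
    if len(forecast) != len(observed):
        raise ValueError("forecast and observed must have the same length")
    if len(forecast) == 0:
        raise ValueError("at least one sample is required")
    return [f - o for f, o in zip(forecast, observed)]


def rmse(forecast: Sequence[float], observed: Sequence[float]) -> float:
    """Root mean square error: square root of the mean squared error."""
    errors = _errors(forecast, observed)
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def mbe(forecast: Sequence[float], observed: Sequence[float]) -> float:
    """Mean bias error: mean of the signed errors."""
    errors = _errors(forecast, observed)
    return sum(errors) / len(errors)


# Made-up 2m temperature values in degrees Celsius.
forecast = [12.0, 15.0, 9.0, 20.0]
observed = [10.0, 15.0, 10.0, 16.0]

print(f"RMSE: {rmse(forecast, observed):.3f}")
print(f"MBE:  {mbe(forecast, observed):.3f}")
```

In this sample the errors are 2, 0, -1, and 4. The single 4-degree miss dominates the RMSE, while the MBE stays positive because the forecast runs warm on average.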

We plan to add more benchmarking metrics in the future...

Regional Analysis

StationBench supports several predefined regions and allows you to create custom regions.

For details on creating and using custom regions, see the Custom Regions Guide.
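Conceptually, a region restricts the benchmark to the stations that fall inside it. The sketch below illustrates that idea with a latitude/longitude bounding box. The `BoundingBox` class, the example bounds, and the station coordinates are all hypothetical, and the actual region definitions in regions.py may be structured differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Hypothetical rectangular region; longitudes assumed in [-180, 180]."""

    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float

    def contains(self, lat: float, lon: float) -> bool:
        """True if the point lies inside the box, edges included."""
        return (
            self.lat_min <= lat <= self.lat_max
            and self.lon_min <= lon <= self.lon_max
        )


# Illustrative bounds only; not taken from regions.py.
example_region = BoundingBox(lat_min=36.0, lat_max=72.0, lon_min=-15.0, lon_max=45.0)

# Made-up station coordinates as (latitude, longitude).
stations = {
    "A": (52.5, 13.4),
    "B": (40.7, -74.0),
    "C": (59.9, 10.8),
}

inside = [
    station_id
    for station_id, (lat, lon) in stations.items()
    if example_region.contains(lat, lon)
]
print(inside)
```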

Owner metadata

- Name: Jua

- Login: juaAI

- Email:

- Kind: organization

- Description: next generation environmental insights platform

- Website: jua.ai

- Location: Germany

- Twitter:

- Company:

- Icon url: https://avatars.githubusercontent.com/u/77738808?v=4

- Repositories: 2

- Last synced at: 2023-07-06T16:35:15.792Z

- Profile URL: https://github.com/juaAI

GitHub Events

Total

- Release event: 3

- Delete event: 24

- Pull request event: 38

- Fork event: 3

- Issues event: 18

- Watch event: 69

- Issue comment event: 10

- Public event: 1

- Push event: 75

- Pull request review event: 64

- Pull request review comment event: 42

- Create event: 22

Last Year

- Release event: 1

- Delete event: 10

- Pull request event: 10

- Fork event: 3

- Issues event: 5

- Watch event: 65

- Issue comment event: 2

- Push event: 23

- Pull request review event: 13

- Pull request review comment event: 7

- Create event: 7

Committers metadata

Last synced: 1 day ago

Total Commits: 39

Total Committers: 5

Avg Commits per committer: 7.8

Development Distribution Score (DDS): 0.487

Commits in past year: 6

Committers in past year: 3

Avg Commits per committer in past year: 2.0

Development Distribution Score (DDS) in past year: 0.333

| Name | Email | Commits |
|---|---|---|
| leoniewgnr | 4****r | 20 |
| Andreas Schlueter | a****r@j****i | 16 |
| kevinjuaai | 1****i | 1 |
| Niall Siegenheim | n****m@g****m | 1 |
| Alexander Jakob Dautel | h****e | 1 |

Committer domains:

- jua.ai: 1

Issue and Pull Request metadata

Last synced: about 1 month ago

Total issues: 15

Total pull requests: 53

Average time to close issues: 29 days

Average time to close pull requests: 2 days

Total issue authors: 7

Total pull request authors: 5

Average comments per issue: 0.13

Average comments per pull request: 0.34

Merged pull request: 49

Bot issues: 0

Bot pull requests: 0

Past year issues: 15

Past year pull requests: 53

Past year average time to close issues: 29 days

Past year average time to close pull requests: 2 days

Past year issue authors: 7

Past year pull request authors: 5

Past year average comments per issue: 0.13

Past year average comments per pull request: 0.34

Past year merged pull request: 49

Past year bot issues: 0

Past year bot pull requests: 0

Top Issue Authors

- roansong (4)

- leoniewgnr (3)

- deepweather (3)

- aschl (2)

- mrshll (1)

- manmeet3591 (1)

- kevinjuaai (1)

Top Pull Request Authors

- leoniewgnr (34)

- aschl (13)

- howtodowtle (2)

- kevinjuaai (2)

- niasie (2)

Top Issue Labels

Top Pull Request Labels

Dependencies

- actions/checkout v4 composite

- actions/setup-python v5 composite

- snok/install-poetry v1 composite

- 145 dependencies

- ipykernel ^6.29.5 develop

- poetry-exec-plugin ^0.3.6 develop

- pytest ^8.0.0 develop

- pytest-cov ^4.1.0 develop

- ruff 0.4.2 develop

- dask ^2024.1.1

- gcsfs ^2024.2.0

- nbformat ^5.10.4

- pandas ^2.2.0

- plotly ^5.18.0

- python ~3.11

- scipy ^1.12.0

- wandb ^0.19.2

- xarray ^2024.1.1

- zarr ^2.16.1

Score: 6.086774726912307